Reining in the Robots: How New Guidelines Aim to Protect Websites

If these guidelines are approved, it will be great... but like the rules of the road, they only work if the crawlers follow them.

TL;DR: Gary Illyes, Dr. Mirja Kühlewind, and AJ Kohn have proposed a set of best practices through the Internet Engineering Task Force (IETF) to improve crawler behaviour. The guidelines are voluntary, but if approved, they should be a step forward for website owners trying to control crawlers.

If you’ve ever wondered how Google finds your website or how ChatGPT learned to write like a human, the answer starts with something called a web crawler.

Think of a web crawler as a digital librarian that visits websites, reads their content, and takes notes.

These automated programs (also called bots or spiders) work 24/7, hopping from link to link across the internet, collecting information for search engines, AI training, and other purposes.

Here’s a simple example: when you publish a new blog post, Google’s crawler (called Googlebot) eventually visits your site, reads that post, and adds it to Google’s massive index.

That’s how your content shows up when someone searches for topics you’ve written about.

Why We Need Rules for Crawlers

A well-behaved crawler is like a polite guest who knocks before entering and doesn’t overstay their welcome.

A badly behaved crawler can be catastrophic, hammering your server with so many requests that your website slows to a crawl or crashes entirely (taking your business offline in the process).

With the explosion of AI companies training their models on web content, crawler traffic has increased dramatically.

Some website owners have reported their servers getting pounded by dozens of different AI crawlers and various bots, each one gobbling up bandwidth and computing resources without asking permission.

It’s like having uninvited guests show up to your house party, eat all your food, and leave without saying thank you.

“For the first time in a decade, automated traffic surpassed human activity, accounting for 51% of all web traffic” (src. Thales)

Partly because of this, Cloudflare, a global cloud services company that provides security, performance, and reliability solutions for websites, has been very publicly trying to control the access AI crawlers have to the websites it serves.

Cloudflare is even backing a method of being paid in exchange for allowing AI to utilize the content on your website; it’s called the RSL Collective (sign up for free!).

“Reddit, People Inc., Yahoo, Internet Brands, Ziff Davis, Fastly, Quora, O’Reilly Media, and Medium among first to support new Really Simple Licensing (RSL) standard, available to any website for free today, to define licensing, usage, and compensation terms for AI crawlers and agents.” (src. RSL Standard)

With all of that said, three people (Gary Illyes, Dr. Mirja Kühlewind, and AJ Kohn) have taken another direction.

They drafted new best practices for crawler operators under the umbrella of the Internet Engineering Task Force (IETF). These guidelines aim to create a voluntary standard that separates the good crawlers from the bad ones.

Let’s break down what really matters in this “Crawler best practices” document.

The Six Rules That Actually Matter

1. Respect the Robots.txt File

Every website can create a file called robots.txt (it sits at yoursite.com/robots.txt) that tells crawlers which parts of the site they can and cannot visit. It’s essentially a “Do Not Enter” sign for specific areas of your website.

The new guidelines make it clear: all crawlers must respect this file. Period. If you tell a crawler not to access your private customer portal or your staging site, it should listen. Sadly, not all crawlers follow this rule today, which is exactly why this document matters.

Additionally, crawlers need to respect something called the X-Robots-Tag, an HTTP header (a technical instruction your server sends along with each page). This gives you another way to control crawler access, especially if you don’t want to use a robots.txt file.
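For the technically curious, here is a minimal sketch of how a crawler might check both signals before fetching a page. It uses Python’s built-in robots.txt parser. The crawler name ExampleBot, the paths, and the helper names load_rules and header_allows_indexing are made up for illustration, and the header check is simplified: it ignores the per-crawler prefixes a real X-Robots-Tag value can carry.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: "ExampleBot" and both paths are invented for illustration.
ROBOTS_TXT = """\
User-agent: ExampleBot
Disallow: /

User-agent: *
Disallow: /portal/
Disallow: /staging/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def header_allows_indexing(x_robots_tag: Optional[str]) -> bool:
    """Simplified check: treat 'noindex' or 'none' in the header as a refusal."""
    if not x_robots_tag:
        return True  # no header means no extra restriction
    directives = {part.strip().lower() for part in x_robots_tag.split(",")}
    return not ({"noindex", "none"} & directives)


rules = load_rules(ROBOTS_TXT)
print(rules.can_fetch("OtherBot", "https://yoursite.com/blog/post"))       # allowed
print(rules.can_fetch("OtherBot", "https://yoursite.com/portal/account"))  # blocked for everyone
print(rules.can_fetch("ExampleBot", "https://yoursite.com/blog/post"))     # blocked for ExampleBot
print(header_allows_indexing("noindex, nofollow"))                         # refused by header
```

A polite crawler would run a check like this before every request and simply skip anything that comes back as blocked.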

2. Identify Yourself Clearly

Imagine someone knocking on your door wearing a mask.

You probably wouldn’t let them in, right? The same principle applies to crawlers.

Every crawler must include a clear identifier in something called the user-agent string (essentially the crawler’s name tag). This identifier should tell you who operates the crawler and provide a link to more information. For example, Google’s crawler identifies itself and links to a page explaining what it does.

The name should make it obvious who owns the crawler and what it’s doing. If a crawler is collecting data to train an AI model, it should say so. No hiding behind vague names or anonymous identities.
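To make the name tag idea concrete, here is a small sketch of what a self-identifying user-agent string could look like and how a site owner might pull the documentation link out of it. The "compatible; Name/Version; +URL" layout mirrors the convention many well-known crawlers already use, but the bot name, the URL, and both function names are hypothetical.

```python
import re
from typing import Optional

# Matches a documentation link written as "+https://..." inside the user-agent.
INFO_URL_PATTERN = re.compile(r"\+(https?://[^\s;)]+)")


def build_user_agent(name: str, version: str, info_url: str) -> str:
    """Build a user-agent that names the crawler and links to its documentation."""
    return f"Mozilla/5.0 (compatible; {name}/{version}; +{info_url})"


def extract_info_url(user_agent: str) -> Optional[str]:
    """Return the documentation link from a user-agent, or None if there is none."""
    match = INFO_URL_PATTERN.search(user_agent)
    return match.group(1) if match else None


# Hypothetical crawler whose name states its purpose.
ua = build_user_agent("ExampleAITrainingBot", "1.0", "https://example.com/bot")
print(ua)
print(extract_info_url(ua))
```

A user-agent with no link at all returns None here, which is exactly the masked visitor the guidelines are trying to discourage.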

3. Don’t Break the Website

This is the big one.

Crawlers must include logic that backs off when they detect they’re causing problems.

If your server starts returning error messages (like the dreaded 503 “Service Unavailable” error), the crawler should slow down or stop entirely.

Smart crawlers go even further.

They monitor how long your server takes to respond and adjust their speed accordingly. If responses that normally take 100 milliseconds suddenly take 2 seconds, that’s a sign the server is struggling.

The guidelines also recommend that crawlers:

Avoid crawling during your peak traffic hours (if a crawler can estimate when those are)

Do not send multiple requests for the same page simultaneously

Limit how deeply they dig into your site to prevent getting stuck in loops

Stay away from areas that require login, have CAPTCHAs, or sit behind paywalls (unless you’ve given explicit permission)

Stick to simple GET requests rather than POST or PUT requests (GET just fetches a page, while POST and PUT send data that can change things on your server)

Be careful about running JavaScript, which can put a significant load on your server

Think of it this way: a polite crawler is like a guest who knocks before entering, stays in the rooms you’ve said are okay to visit, and doesn’t slam all your doors repeatedly until the hinges break.
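The back-off behaviour described above can be sketched in a few lines. This is only an illustration, not the logic of any particular crawler: the function name next_delay, the doubling rule, the "four times slower than usual" threshold, and the delay limits are all assumptions, and treating 429 ("Too Many Requests") the same as 503 is an addition of this sketch.

```python
def next_delay(
    current_delay: float,
    status_code: int,
    response_seconds: float,
    baseline_seconds: float,
    min_delay: float = 1.0,
    max_delay: float = 300.0,
) -> float:
    """Return how many seconds to wait before the next request to this site."""
    overloaded = status_code in (429, 503)            # server says it is struggling
    slow = response_seconds > 4 * baseline_seconds    # server is much slower than usual
    if overloaded or slow:
        # Back off quickly: double the wait, up to a ceiling.
        return min(current_delay * 2, max_delay)
    # Healthy response: speed up again, but only gradually.
    return max(current_delay * 0.9, min_delay)


# A 503 doubles the wait; so does a response that took 2 s instead of the usual 0.1 s.
print(next_delay(1.0, 503, 0.1, 0.1))
print(next_delay(1.0, 200, 2.0, 0.1))
```

Backing off fast and recovering slowly is a deliberate choice: a struggling server gets relief right away, and the crawler only ramps back up once responses look healthy for a while.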

4. Use Caching Properly

Caching is like taking a photograph instead of visiting the same place repeatedly.

If a crawler visited your homepage yesterday and you haven’t updated it, there’s no reason for it to download the entire page again today.

Websites can send caching instructions that tell crawlers “this page doesn’t change often, so you don’t need to check it again for a week.”

Crawlers must respect these instructions, which reduces unnecessary traffic to your site and saves everyone time and resources.
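As a rough sketch of what respecting those instructions means, the snippet below reads the standard Cache-Control header and decides whether a stored copy is still fresh. The function names are made up, and the handling is deliberately simplified: it only looks at max-age, no-cache, and no-store, and treats a page with no caching instructions as needing a re-check.

```python
import re
from typing import Optional

MAX_AGE_PATTERN = re.compile(r"max-age\s*=\s*(\d+)", re.IGNORECASE)


def max_age_seconds(cache_control: Optional[str]) -> Optional[int]:
    """Return the allowed cache lifetime in seconds, or None if none is given."""
    if not cache_control:
        return None
    lowered = cache_control.lower()
    if "no-store" in lowered or "no-cache" in lowered:
        return 0  # the site asked not to have a stored copy reused as-is
    match = MAX_AGE_PATTERN.search(cache_control)
    return int(match.group(1)) if match else None


def is_fresh(fetched_at: float, now: float, cache_control: Optional[str]) -> bool:
    """True if the stored copy can be reused without contacting the site."""
    max_age = max_age_seconds(cache_control)
    if max_age is None:
        return False
    return (now - fetched_at) < max_age


ONE_DAY = 86_400
ONE_WEEK = 7 * ONE_DAY
header = f"public, max-age={ONE_WEEK}"  # "don't check again for a week"
print(is_fresh(fetched_at=0, now=ONE_DAY, cache_control=header))       # one day later
print(is_fresh(fetched_at=0, now=ONE_WEEK + 1, cache_control=header))  # just over a week later
```

Once a copy is no longer fresh, a crawler can still avoid a full download by sending a conditional request (If-None-Match or If-Modified-Since), which lets your server answer with a tiny "nothing changed" response.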

5. Publish Your IP Addresses

This requirement is new and particularly important.

Crawler operators must publish a list of the IP addresses (the unique numbers that identify computers on the internet) they use for crawling.

This list needs to be in a standardized format and kept reasonably up to date.

A crawler’s IP address helps website owners verify that it is who it claims to be.

Some malicious bots pretend to be Google’s crawler to bypass security measures.

If Google publishes its official IP addresses, you can check whether traffic claiming to be Googlebot actually comes from Google’s servers.

The IP address list should be linked from the crawler’s documentation page and included in the page’s metadata (information about the page that’s readable by machines).
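Here is a small sketch of how a website owner could use such a list. The address ranges below are reserved documentation ranges standing in for a crawler operator’s real published list, and the function name is hypothetical; in practice you would download the operator’s official file and load the ranges from it.

```python
import ipaddress
from typing import Iterable

# Placeholder ranges (reserved for documentation), NOT any real crawler's addresses.
PUBLISHED_PREFIXES = ["192.0.2.0/24", "2001:db8::/32"]


def is_published_crawler_ip(ip: str, prefixes: Iterable[str]) -> bool:
    """True if the visitor's IP falls inside one of the published ranges."""
    address = ipaddress.ip_address(ip)
    for prefix in prefixes:
        network = ipaddress.ip_network(prefix)
        # Only compare IPv4 with IPv4 and IPv6 with IPv6.
        if address.version == network.version and address in network:
            return True
    return False


# A request claiming to be the crawler, arriving from two different addresses.
print(is_published_crawler_ip("192.0.2.15", PUBLISHED_PREFIXES))    # inside a published range
print(is_published_crawler_ip("198.51.100.7", PUBLISHED_PREFIXES))  # outside: likely an impostor
```

If the user-agent says one thing and the IP address says another, you have good reason to treat the visitor as an impostor and block it.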

6. Explain What You’re Doing with the Data

Transparency is critical.

Every crawler must provide a documentation page (linked in its user-agent string) that explains:

How the collected data will be used (indexing for search? Training an AI model? Market research?)

How to block the crawler using robots.txt (with specific examples)

How to verify the crawler’s identity

Contact information for the crawler’s operator

If the crawler operator has chosen not to follow any of these best practices, they must explain why on their documentation page.

This is about respect and informed consent. Website owners deserve to know who’s taking their content and what they’re doing with it.

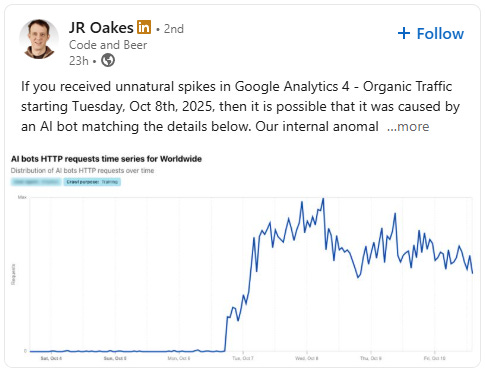

Update: Here is a great example of how bots/crawlers (not necessarily interchangeable) can have negative effects on websites. This may not have caused any site speed issues (or maybe it did), but it did skew valuable data.

Your Takeaway

If you own a website, these guidelines give you more control and protection.

You’ll have clearer ways to identify legitimate crawlers and block problematic ones.

You will also have better tools to prevent crawlers from overwhelming your server and causing downtime.

If you operate a crawler, following these voluntary practices marks you as a responsible player in the ecosystem.

It builds trust with website owners and reduces the likelihood that your crawler will get blocked.

The internet works because of cooperation.

Search engines need content to index, AI companies need data to train their models, and website owners need visitors to sustain their businesses.

If these voluntary guidelines are approved and widely adopted, they will help maintain that balance by ensuring crawlers behave like good citizens rather than digital vandals.